Interaktive Datenanalyse und Visualisierung mit Python¶

Dr. Yves J. Hilpisch

The Python Quants GmbH

Pycon DE, Köln – 16. Oktober 2013

Python for Analytics¶

Corporations, decision makers and analysts nowadays generally face a number of problems with data:

- sources: data typically comes from different sources, like from the Web, from in-house databases or it is generated in-memory, e.g. for simulation purposes

- formats: data is generally available in different formats, like SQL databases/tables, Excel files, CSV files, arrays, proprietary formats

- structure: data typically comes differently structured, be it unstructured, simply indexed, hierarchically indexed, in table form, in matrix form, in multidimensional arrays

- completeness: real-world data is generally incomplete, i.e. there is missing data (e.g. along an index) or multiple series of data cannot be aligned correctly (e.g. two time series with different time indexes)

- conventions: for some types of data there a many “competing” conventions with regard to formatting, like for dates and time

- interpretation: some data sets contain information that can be easily and intelligently interpreted, like a time index, others not, like texts

- performance: reading, streamlining, aligning, analyzing – i.e. processing – (big) data sets might be slow

In addition to these data-oriented problems, there typically are organizational issues that have to be considered:

- departments: the majority of companies is organized in departments with different technologies, databases, etc., leading to “data silos”

- analytics skills: analytical and business skills in general are possessed by people working in line functions (e.g. production) or administrative functions (e.g. finance)

- technical skills: technical skills, like retrieving data from databases and visualizing them, are generally possessed by people in technology functions (e.g. development, systems operations)

This presentation focuses on

- Python as a general purpose analytics environment

- interactive analytics examples

- prototyping-like Python usage

It does not address such important issues like

- architectural issues regarding hardware and software

- development processes, testing, documentation and production

- real world problem modeling

Some Python Fundamentals¶

Fundamental Python Libraries¶

A fundamental Python stack for interactive data analytics and visualization should at least contain the following libraries tools:

- Python – the Python interpreter itself

- NumPy – high performance, flexible array structures and operations

- SciPy – collection of scientific modules and functions (e.g. for regression, optimization, integration)

- pandas – time series and panel data analysis and I/O

- PyTables – hierarchical, high performance database (e.g. for out-of-memory analytics)

- matplotlib – 2d and 3d visualization

- IPython – interactive data analytics, visualization, publishing

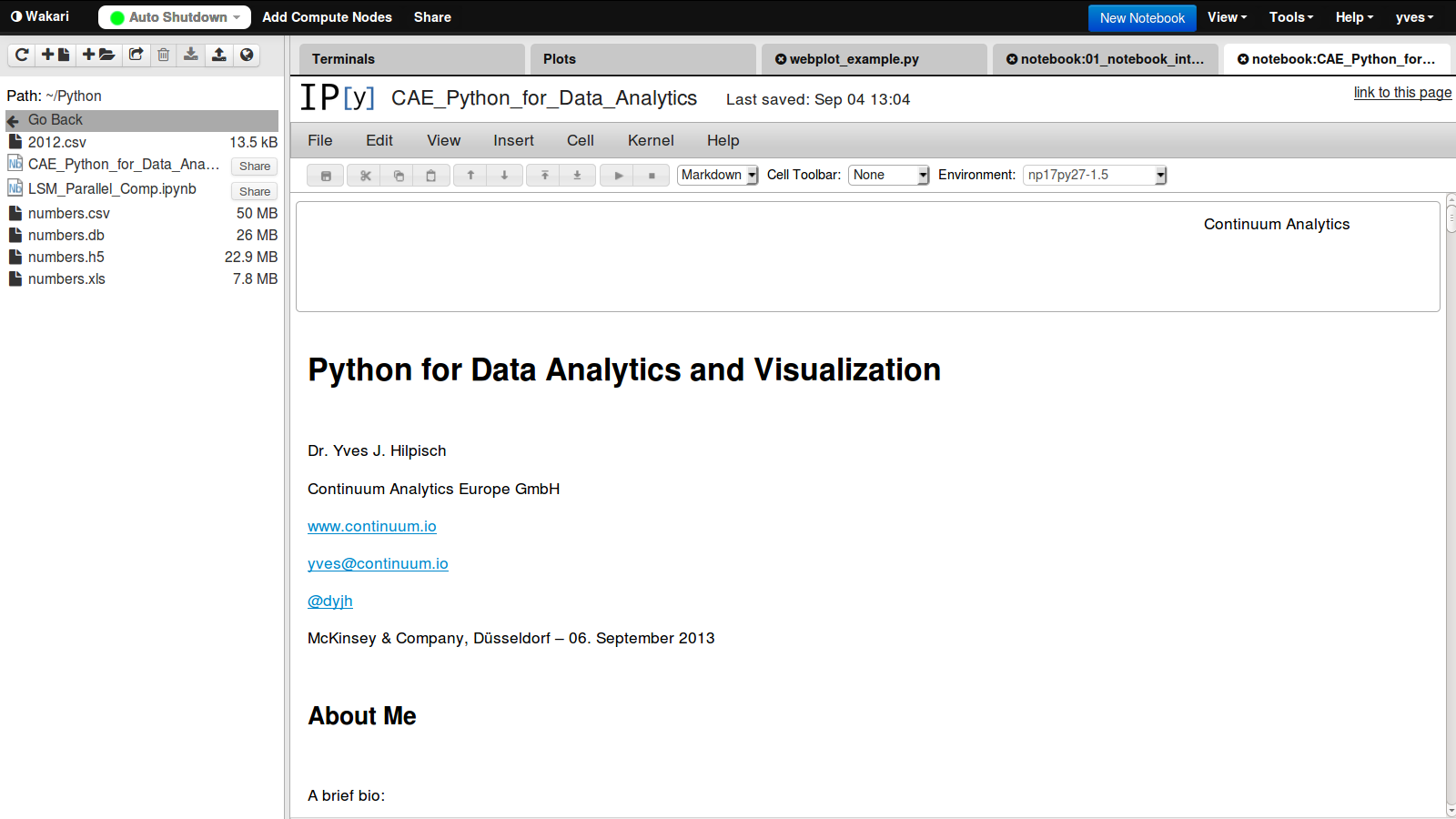

It is best to use either the Python distribution Anaconda or the Web-based analytics environment Wakari. Both provide almost "complete" Python environments.

For example, pandas can, among others, help with the following data-related problems:

- sources: pandas reads data directly from different data sources such as SQL databases, Excel files or JSON based APIs

- formats: pandas can process input data in different formats like CSV files or Excel files; it can also generate output in different formats like CSV, XLS, HTML or JSON

- structure: pandas' strength lies in structured data formats, like time series and panel data

- completeness: pandas automatically deals with missing data in most circumstances, e.g. computing sums even if there are a few or many “not a number”, i.e. missing, values

- conventions/interpretation: for example, pandas can interpret and convert different date-time formats to Python datetime objects and/or timestamps

- performance: the majority of pandas classes, methods and functions is implemented in a performance-oriented fashion making heavy use of the Python/C compiler Cython

First Interactive Steps with Python¶

As a simple example let's generate a NumPy array with five sets of 1000 (pseudo-)random numbers each.

import numpy as np # this imports the NumPy library

data = np.random.standard_normal((5, 1000)) # generate 5 sets with 1000 rn each

data[:, :5].round(3) # print first five values of each set rounded to 3 digits

array([[-0.744, -0.119, 0.054, 0.567, 1.443],

[ 1.57 , 0.474, 0.839, 0.322, -1.06 ],

[-1.274, -0.878, -0.483, -1.047, 0.087],

[-0.951, -0.364, -0.121, 0.51 , 0.76 ],

[ 0.746, -0.883, 0.582, 0.184, -2.084]])

Let's plot a histogram of the 1st, 2nd and 3rd data set.

import matplotlib.pyplot as plt # this imports matplotlib.pyplot

plt.hist([data[0], data[1], data[2]], label=['Set 0', 'Set 1', 'Set 2'])

plt.grid(True) # grid for better readability

plt.legend()

<matplotlib.legend.Legend at 0x105afc550>

We then want to plot the 'running' cumulative sum of each set.

plt.figure() # initialize figure object

plt.grid(True)

for data_set in enumerate(data): # iterate over all rows

plt.plot(data_set[1].cumsum(), label='Set %s' % data_set[0])

# plot the running cumulative sums for each row

plt.legend(loc=0) # write legend with labels

<matplotlib.legend.Legend at 0x105fcfa10>

Some fundamental statistics from our data sets.

data.mean(axis=1) # average value of the 5 sets

array([ 0.01184311, 0.01059239, 0.0334466 , 0.02569466, 0.0212998 ])

data.std(axis=1) # standard deviation of the 5 sets

array([ 0.9713451 , 0.98814151, 1.01958776, 0.97124342, 0.98936316])

np.corrcoef(data) # correltion matrix of the 5 data sets

array([[ 1. , 0.00222048, 0.02860959, -0.02405137, 0.02163565],

[ 0.00222048, 1. , 0.03714753, -0.00609016, -0.01790324],

[ 0.02860959, 0.03714753, 1. , 0.00753141, -0.01919302],

[-0.02405137, -0.00609016, 0.00753141, 1. , -0.03417233],

[ 0.02163565, -0.01790324, -0.01919302, -0.03417233, 1. ]])

General I/O Capabilities of Python¶

SQL Databases¶

Currently, and in the foreseeable future as well, most corporate data is stored in SQL databases. We take this as a starting point and generate a SQL table with dummy data.

filename = 'numbers'

import sqlite3 as sq3 # imports the SQLite library

# Remove files if they exist

import os

try:

os.remove(filename + '.db')

os.remove(filename + '.h5')

os.remove(filename + '.csv')

os.remove(filename + '.xls')

except:

pass

We create a table and populate the table with the dummy data.

query = 'CREATE TABLE numbs (no1 real, no2 real, no3 real, no4 real, no5 real)'

con = sq3.connect(filename + '.db')

con.execute(query)

con.commit()

# Writing Random Numbers to SQLite3 Database

rows = 5000000 # 5,000,000 rows for the SQL table (ca. 250 MB in size)

nr = np.random.standard_normal((rows, 5))

%time con.executemany('INSERT INTO numbs VALUES(?, ?, ?, ?, ?)', nr)

con.commit()

CPU times: user 1min 8s, sys: 1.2 s, total: 1min 9s Wall time: 1min 10s

We can now read the data into a NumPy array.

# Reading a couple of rows from SQLite3 database table

array(con.execute('SELECT * FROM numbs').fetchmany(3)).round(3)

array([[-0.333, 0.4 , 0.562, 1.242, 0.375],

[ 1.167, -1.407, 1.366, 1.576, -3.083],

[-0.091, -0.253, -1.078, 0.421, -1.411]])

# Reading the whole table into an NumPy array

%time result = array(con.execute('SELECT * FROM numbs').fetchall())

CPU times: user 38.4 s, sys: 1.74 s, total: 40.1 s Wall time: 41.5 s

nr = 0.0; result = 0.0 # memory clean-up

Reading and Writing Data with pandas¶

pandas is optimized both for convenience and performance when it comes to analytics and I/O operations.

import pandas as pd

import pandas.io.sql as pds

%time data = pds.read_frame('SELECT * FROM numbs', con)

con.close()

CPU times: user 17.2 s, sys: 1.94 s, total: 19.1 s Wall time: 19.5 s

data.head()

| no1 | no2 | no3 | no4 | no5 | |

|---|---|---|---|---|---|

| 0 | -0.332945 | 0.400482 | 0.561553 | 1.241988 | 0.375397 |

| 1 | 1.166627 | -1.406514 | 1.365680 | 1.575697 | -3.082749 |

| 2 | -0.090500 | -0.253373 | -1.077684 | 0.421027 | -1.410713 |

| 3 | -0.834979 | 0.707721 | 0.017805 | -0.289234 | -0.577511 |

| 4 | 0.098786 | 0.531318 | 0.322057 | 1.702852 | 0.569968 |

The plotting of larger data sets often is a problem. This can easily be accomplished here by only plotting every 500th data row, for instance.

%time data[::500].cumsum().plot(grid=True, legend=True) # every 500th row is plotted

CPU times: user 94.9 ms, sys: 5.04 ms, total: 99.9 ms Wall time: 107 ms

<matplotlib.axes.AxesSubplot at 0x11326d310>

There are some reasons why you may want to write the DataFrame to disk:

- Database Performance: you do not want to query the SQL database everytime anew

- IO Speed: you want to increase data/information retrievel

- Data Structure: maybe you want to store additional data with the original data set or you have generated a DataFrame out of multiple sources

pandas is tightly integrated with PyTables, a library that implements the HDF5 standard for Python. It makes data storage and I/O operations not only convenient but quite fast as well.

h5 = pd.HDFStore(filename + '.h5', 'w')

%time h5['data'] = data # writes the DataFrame to HDF5 database

CPU times: user 218 ms, sys: 340 ms, total: 559 ms Wall time: 925 ms

Now, we can read the data from the HDF5 file into another DataFrame object. Performance of such an operation generally is quite high.

%time data_new = h5['data']

CPU times: user 15.3 ms, sys: 209 ms, total: 225 ms Wall time: 226 ms

%time data.sum() - data_new.sum() # checking if the DataFrames are the same

CPU times: user 688 ms, sys: 186 ms, total: 874 ms Wall time: 874 ms

no1 0 no2 0 no3 0 no4 0 no5 0 dtype: float64

data_new = 0.0 # memory clean-up

The data is easily exported to different standard data file formats like comma separated value files (CSV).

%time data.to_csv(filename + '.csv') # writes the whole data set as CSV file

CPU times: user 44.9 s, sys: 925 ms, total: 45.8 s Wall time: 46.1 s

csv_file = open(filename + '.csv')

for i in range(5):

print csv_file.readline(),

,no1,no2,no3,no4,no5 0,-0.3329451499158752,0.4004816848758443,0.5615527394429235,1.2419879035108068,0.37539651544956537 1,1.1666272817521428,-1.4065143770283441,1.365679748720007,1.575697101033008,-3.082748727015887 2,-0.09050049767901604,-0.25337312223007863,-1.0776842585535016,0.4210272427386414,-1.4107126185503545 3,-0.8349787334130186,0.7077214018355428,0.01780482787772783,-0.28923390676036287,-0.5775107147440225

pandas also interacts easily with Microsoft Excel spreadsheet files (XLS/XLSX).

%time data.ix[:65000].to_excel(filename + '.xls') # first 65,000 rows written in a Excel file

CPU times: user 8.34 s, sys: 165 ms, total: 8.51 s Wall time: 8.52 s

With pandas you can in one step read data from an Excel file and e.g. plot it.

%time pd.read_excel(filename + '.xls', 'sheet1').hist(grid=True)

CPU times: user 2.1 s, sys: 42.2 ms, total: 2.15 s Wall time: 2.16 s

array([[<matplotlib.axes.AxesSubplot object at 0x11d024690>,

<matplotlib.axes.AxesSubplot object at 0x108b8b350>,

<matplotlib.axes.AxesSubplot object at 0x108bafc10>],

[<matplotlib.axes.AxesSubplot object at 0x1086a40d0>,

<matplotlib.axes.AxesSubplot object at 0x1086c0450>,

<matplotlib.axes.AxesSubplot object at 0x1086e4c10>]], dtype=object)

One can also generate HTML code, e.g. for automatic Web-based report generation.

html_code = data.ix[:2].to_html()

html_code

u'<table border="1" class="dataframe">\n <thead>\n <tr style="text-align: right;">\n <th></th>\n <th>no1</th>\n <th>no2</th>\n <th>no3</th>\n <th>no4</th>\n <th>no5</th>\n </tr>\n </thead>\n <tbody>\n <tr>\n <th>0</th>\n <td>-0.332945</td>\n <td> 0.400482</td>\n <td> 0.561553</td>\n <td> 1.241988</td>\n <td> 0.375397</td>\n </tr>\n <tr>\n <th>1</th>\n <td> 1.166627</td>\n <td>-1.406514</td>\n <td> 1.365680</td>\n <td> 1.575697</td>\n <td>-3.082749</td>\n </tr>\n <tr>\n <th>2</th>\n <td>-0.090500</td>\n <td>-0.253373</td>\n <td>-1.077684</td>\n <td> 0.421027</td>\n <td>-1.410713</td>\n </tr>\n </tbody>\n</table>'

from IPython.core.display import HTML

HTML(html_code)

| no1 | no2 | no3 | no4 | no5 | |

|---|---|---|---|---|---|

| 0 | -0.332945 | 0.400482 | 0.561553 | 1.241988 | 0.375397 |

| 1 | 1.166627 | -1.406514 | 1.365680 | 1.575697 | -3.082749 |

| 2 | -0.090500 | -0.253373 | -1.077684 | 0.421027 | -1.410713 |

Some final checks and data cleaning.

ll numb*

-rw-rw-rw- 1 yhilpisch staff 529668076 15 Okt 14:23 numbers.csv -rw-r--r-- 1 yhilpisch staff 272386048 15 Okt 14:21 numbers.db -rw-rw-rw- 1 yhilpisch staff 240007368 15 Okt 14:22 numbers.h5 -rw-rw-rw- 1 yhilpisch staff 8130560 15 Okt 14:23 numbers.xls

data = 0.0 # memory clean-up

try:

os.remove(filename + '.db')

os.remove(filename + '.h5')

os.remove(filename + '.csv')

os.remove(filename + '.xls')

except:

pass

Parallel Execution of Option Pricing Code¶

The algorithm we (arbitrarily) choose is the Monte Carlo estimator for a European option value in the Black-Scholes-Merton set-up.

\[dS_t = r S_t dt + \sigma S_t dZ_t\]

def BSM_MCS(S0):

''' Black-Scholes-Merton MCS Estimator for European Calls. '''

import numpy as np

K = 100.; T = 1.0; r = 0.05; vola = 0.2

M = 50; I = 20000

dt = T / M

rand = np.random.standard_normal((M + 1, I))

S = np.zeros((M + 1, I)); S[0] = S0

for t in range(1, M + 1):

S[t] = S[t-1] * np.exp((r - 0.5 * vola ** 2) * dt + vola * np.sqrt(dt) * rand[t])

BSM_MCS_Value = np.sum(np.maximum(S[-1] - K, 0)) / I

return BSM_MCS_Value

The Sequential Calculation¶

The benchmark case of sequential execution.

from time import time

n = 100

def seqValue():

optionValues = []

for S in linspace(80, 100, n):

optionValues.append(BSM_MCS(S))

return optionValues

t0 = time()

seqValue()

t1 = time(); d1 = t1 - t0

print "Number of Options %8d" % n

print "Duration in Seconds %8.3f" % d1

print "Options per Second %8.3f" % (n / d1)

Number of Options 100 Duration in Seconds 6.374 Options per Second 15.689

The Parallel Calculation¶

First, start a local cluster, if you have multiple cores in your machine.

# in the shell ...

####

# ipcluster start -n 4

####

# or maybe more cores

Enable parallel computing capabilities.

from IPython.parallel import Client

cluster_profile = "default"

# the profile is set to the default one

# see IPython docs for further details

c = Client(profile=cluster_profile)

view = c.load_balanced_view()

The function for the parallel execution of a number of different options valuations.

def parValue():

optionValues = []

for S in linspace(80, 100, n):

value = view.apply_async(BSM_MCS, S)

# asynchronously calculate the option values

optionValues.append(value)

return optionValues

def execution():

optionValues = parValue() # calculate all values

print "Submitted tasks %8d" % len(optionValues)

c.wait(optionValues)

return optionValues

t0 = time()

optionValues = execution()

t1 = time(); d2 = t1 - t0

print "Duration in Seconds %8.3f" % d2

print "Options per Second %8.3f" % (n / d2)

print "Speed-up Factor %8.3f" % (d1 / d2)

Submitted tasks 100 Duration in Seconds 3.060 Options per Second 32.676 Speed-up Factor 2.083

Analyzing Financial Time Series Data¶

Historical Correlation between EURO STOXX 50 and VSTOXX¶

It is a stylized fact that stock indexes and related volatility indexes are highly negatively correlated. The following example analyzes this stylized fact based on the EURO STOXX 50 stock index and the VSTOXX volatility index using Ordinary Least-Squares regession (OLS).

First, we collect historical data for both the EURO STOXX 50 stock and the VSTOXX volatility index.

import pandas as pd

from urllib import urlretrieve

es_url = 'http://www.stoxx.com/download/historical_values/hbrbcpe.txt'

vs_url = 'http://www.stoxx.com/download/historical_values/h_vstoxx.txt'

urlretrieve(es_url, 'es.txt')

urlretrieve(vs_url, 'vs.txt')

('vs.txt', <httplib.HTTPMessage instance at 0x109202ea8>)

The EURO STOXX 50 data is not yet in the right format. Some house cleaning is necessary.

lines = open('es.txt').readlines() # reads the whole file line-by-line

new_file = open('es50.txt', 'w') # opens a new file

new_file.writelines('date' + lines[3][:-1].replace(' ', '') + ';DEL' + lines[3][-1])

# writes the corrected third line of the orginal file as first line of new file

new_file.writelines(lines[4:-1]) # writes the remaining lines of the orginial file

print list(open('es50.txt'))[:5] # opens the new file for inspection

['date;SX5P;SX5E;SXXP;SXXE;SXXF;SXXA;DK5F;DKXF;DEL\n', '31.12.1986;775.00 ; 900.82 ; 82.76 ; 98.58 ; 98.06 ; 69.06 ; 645.26 ; 65.56\n', '01.01.1987;775.00 ; 900.82 ; 82.76 ; 98.58 ; 98.06 ; 69.06 ; 645.26 ; 65.56\n', '02.01.1987;770.89 ; 891.78 ; 82.57 ; 97.80 ; 97.43 ; 69.37 ; 647.62 ; 65.81\n', '05.01.1987;771.89 ; 898.33 ; 82.82 ; 98.60 ; 98.19 ; 69.16 ; 649.94 ; 65.82\n']

Now, the data can be safely read into a DataFrame object.

es = pd.read_csv('es50.txt', index_col=0, parse_dates=True, sep=';', dayfirst=True)

del es['DEL'] # delete the helper column

es

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 6907 entries, 1986-12-31 00:00:00 to 2013-10-10 00:00:00 Data columns (total 8 columns): SX5P 6907 non-null values SX5E 6907 non-null values SXXP 6907 non-null values SXXE 6907 non-null values SXXF 6906 non-null values SXXA 6906 non-null values DK5F 6906 non-null values DKXF 6906 non-null values dtypes: float64(7), object(1)

The VSTOXX data can be read without touching the raw data.

vs = pd.read_csv('vs.txt', index_col=0, header=2, parse_dates=True, sep=',', dayfirst=True)

# you can alternatively read from the Web source directly

# without saving the csv file to disk:

# vs = pd.read_csv(vs_url, index_col=0, header=2,

# parse_dates=True, sep=',', dayfirst=True)

We now merge the data for further analysis.

from datetime import datetime

data = pd.DataFrame({'EUROSTOXX' :

es['SX5E'][es.index > datetime(1999, 12, 31)]})

data = data.join(pd.DataFrame({'VSTOXX' :

vs['V2TX'][vs.index > datetime(1999, 12, 31)]}))

data

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 3532 entries, 2000-01-03 00:00:00 to 2013-10-10 00:00:00 Data columns (total 2 columns): EUROSTOXX 3532 non-null values VSTOXX 3512 non-null values dtypes: float64(2)

Let's inspect the two time series.

data.head()

| EUROSTOXX | VSTOXX | |

|---|---|---|

| date | ||

| 2000-01-03 | 4849.22 | 30.98 |

| 2000-01-04 | 4657.83 | 33.22 |

| 2000-01-05 | 4541.75 | 32.59 |

| 2000-01-06 | 4500.69 | 31.18 |

| 2000-01-07 | 4648.27 | 27.44 |

A picture can tell the complete story.

data.plot(subplots=True, grid=True, style='b')

array([<matplotlib.axes.AxesSubplot object at 0x11d036ed0>,

<matplotlib.axes.AxesSubplot object at 0x1185a0110>], dtype=object)

We now generate log returns for both time series.

rets = np.log(data / data.shift(1))

rets.head()

| EUROSTOXX | VSTOXX | |

|---|---|---|

| date | ||

| 2000-01-03 | NaN | NaN |

| 2000-01-04 | -0.040268 | 0.069810 |

| 2000-01-05 | -0.025237 | -0.019147 |

| 2000-01-06 | -0.009082 | -0.044229 |

| 2000-01-07 | 0.032264 | -0.127775 |

To this new data set, also stored in a DataFrame object, we apply OLS.

xdat = rets['EUROSTOXX']

ydat = rets['VSTOXX']

model = pd.ols(y=ydat, x=xdat)

model

-------------------------Summary of Regression Analysis-------------------------

Formula: Y ~ <x> + <intercept>

Number of Observations: 3491

Number of Degrees of Freedom: 2

R-squared: 0.5572

Adj R-squared: 0.5571

Rmse: 0.0378

F-stat (1, 3489): 4390.4561, p-value: 0.0000

Degrees of Freedom: model 1, resid 3489

-----------------------Summary of Estimated Coefficients------------------------

Variable Coef Std Err t-stat p-value CI 2.5% CI 97.5%

--------------------------------------------------------------------------------

x -2.7063 0.0408 -66.26 0.0000 -2.7863 -2.6262

intercept -0.0007 0.0006 -1.05 0.2949 -0.0019 0.0006

---------------------------------End of Summary---------------------------------

Again, we want to see how our results look graphically.

plt.plot(xdat, ydat, 'r.')

ax = plt.axis() # grab axis values

x = np.linspace(ax[0], ax[1] + 0.01)

plt.plot(x, model.beta[1] + model.beta[0] * x, 'b', lw=2)

plt.grid(True)

plt.axis('tight')

(-0.10000000000000001, 0.16, -0.43367327121515986, 0.43706494329021084)

Analyzing High Frequency Data¶

Using pandas, the code that follows reads intraday, high-frequency data from a Web source, plots it and resamples it.

date = datetime.now()

url = 'http://hopey.netfonds.no/posdump.php?date=%s%s%s&paper=AAPL.O&csv_format=csv' % ('2013', '10', '07')

# you may have to adjust the date since only recent dates are available

urlretrieve(url, 'aapl.csv')

('aapl.csv', <httplib.HTTPMessage instance at 0x10b68f950>)

AAPL = pd.read_csv('aapl.csv', index_col=0, header=0, parse_dates=True)

AAPL

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 13597 entries, 2013-10-07 10:00:01 to 2013-10-07 22:20:54 Data columns (total 6 columns): bid 13597 non-null values bid_depth 13597 non-null values bid_depth_total 13597 non-null values offer 13597 non-null values offer_depth 13597 non-null values offer_depth_total 13597 non-null values dtypes: float64(2), int64(4)

The intraday evolution of the Apple stock price.

AAPL['bid'].plot()

<matplotlib.axes.AxesSubplot at 0x10b69fc90>

A resampling of the data is easily accomplished with pandas.

import datetime as dt

# this resamples the record frequency to 5 minutes, using mean as aggregation rule

AAPL_5min = AAPL.resample(rule='5min', how='mean')

AAPL_5min.head()

| bid | bid_depth | bid_depth_total | offer | offer_depth | offer_depth_total | |

|---|---|---|---|---|---|---|

| time | ||||||

| 2013-10-07 10:00:00 | 484.543953 | 102.325581 | 102.325581 | 485.881163 | 141.860465 | 141.860465 |

| 2013-10-07 10:05:00 | 484.252174 | 121.739130 | 121.739130 | 485.391739 | 126.086957 | 126.086957 |

| 2013-10-07 10:10:00 | 483.835714 | 157.142857 | 157.142857 | 484.905714 | 100.000000 | 100.000000 |

| 2013-10-07 10:15:00 | 484.132500 | 225.000000 | 225.000000 | 484.880000 | 100.000000 | 100.000000 |

| 2013-10-07 10:20:00 | 484.200588 | 129.411765 | 129.411765 | 484.824706 | 105.882353 | 105.882353 |

Let's have a graphical look at the new data set.

AAPL_5min['bid'].plot()

<matplotlib.axes.AxesSubplot at 0x10b6a2dd0>

Obvioulsly, there hasn't been trading data for every interval.

pandas has a number of approaches to fill in missing data.

AAPL_5min['bid'].fillna(method='ffill').plot() # this forward fills missing data

<matplotlib.axes.AxesSubplot at 0x108bed490>

Analyzing Non-Financial Real-World Data¶

The Example and First Analyses¶

This example analyzes the frequency of bikes on 7 different bike paths in Montreal.

The data is from the Web site Bike Data.

Thanks to Julia Evans for providing this example (cf. Julia's Notebook).

After having downloaded and extracted the data zip file from the source, we can read the data for 2012 with pandas into a DataFrame.

bikes = pd.read_csv('./Data/2012.csv', encoding='latin1', sep=';', index_col='Date', parse_dates=True, dayfirst=True)

bikes.head()

| Berri 1 | Brébeuf (données non disponibles) | Côte-Sainte-Catherine | Maisonneuve 1 | Maisonneuve 2 | du Parc | Pierre-Dupuy | Rachel1 | St-Urbain (données non disponibles) | |

|---|---|---|---|---|---|---|---|---|---|

| Date | |||||||||

| 2012-01-01 | 35 | NaN | 0 | 38 | 51 | 26 | 10 | 16 | NaN |

| 2012-01-02 | 83 | NaN | 1 | 68 | 153 | 53 | 6 | 43 | NaN |

| 2012-01-03 | 135 | NaN | 2 | 104 | 248 | 89 | 3 | 58 | NaN |

| 2012-01-04 | 144 | NaN | 1 | 116 | 318 | 111 | 8 | 61 | NaN |

| 2012-01-05 | 197 | NaN | 2 | 124 | 330 | 97 | 13 | 95 | NaN |

We want to get rid of those columns without data.

bikes = bikes.dropna(axis=1) # delete empty ('NaN') columns

bikes # the DataFrame object

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 310 entries, 2012-01-01 00:00:00 to 2012-11-05 00:00:00 Data columns (total 7 columns): Berri 1 310 non-null values Côte-Sainte-Catherine 310 non-null values Maisonneuve 1 310 non-null values Maisonneuve 2 310 non-null values du Parc 310 non-null values Pierre-Dupuy 310 non-null values Rachel1 310 non-null values dtypes: int64(7)

Let's see how the first three different paths are used by bikers.

rcParams['figure.figsize'] = (7, 7) # enlarge figure size

bikes[bikes.columns[:3]].plot(subplots=True, grid=True, color='b')

# plot the first three columns of the DataFrame

array([<matplotlib.axes.AxesSubplot object at 0x108bfbe90>,

<matplotlib.axes.AxesSubplot object at 0x108cc60d0>,

<matplotlib.axes.AxesSubplot object at 0x108ce6c90>], dtype=object)

pandas provides a number of convenience functions/methods to generate meta data for DataFrame objects.

np.round(bikes.describe(), 1)

| Berri 1 | Côte-Sainte-Catherine | Maisonneuve 1 | Maisonneuve 2 | du Parc | Pierre-Dupuy | Rachel1 | |

|---|---|---|---|---|---|---|---|

| count | 310.0 | 310.0 | 310.0 | 310.0 | 310.0 | 310.0 | 310.0 |

| mean | 2985.0 | 1233.4 | 1983.3 | 3510.3 | 1863.0 | 1054.3 | 2873.5 |

| std | 2169.3 | 944.6 | 1450.7 | 2485.0 | 1332.5 | 1064.0 | 2039.3 |

| min | 32.0 | 0.0 | 33.0 | 47.0 | 18.0 | 0.0 | 0.0 |

| 25% | 596.0 | 243.2 | 427.0 | 831.0 | 474.8 | 53.2 | 731.0 |

| 50% | 3128.0 | 1269.0 | 2019.5 | 3688.5 | 1822.5 | 704.0 | 3223.5 |

| 75% | 4973.2 | 2003.0 | 3168.2 | 5731.8 | 3069.0 | 1818.5 | 4717.2 |

| max | 7077.0 | 3124.0 | 4999.0 | 8222.0 | 4510.0 | 4386.0 | 6595.0 |

Which one is overall the most popular bike path?

bikes.sum().plot(kind='barh', grid=True)

<matplotlib.axes.AxesSubplot at 0x108c1dc90>

Grouping and Aggregation¶

You often have to group data sets and aggregate data values accordingly. In this case, it is natural to ask for the different frequencies of bikes for different weekdays. pandas can handle such tasks really well. First, we add a weekday column to the DataFrame object.

bikes['weekday'] = bikes.index.weekday

bikes.tail()

| Berri 1 | Côte-Sainte-Catherine | Maisonneuve 1 | Maisonneuve 2 | du Parc | Pierre-Dupuy | Rachel1 | weekday | |

|---|---|---|---|---|---|---|---|---|

| Date | ||||||||

| 2012-11-01 | 2405 | 1208 | 1701 | 3082 | 2076 | 165 | 2461 | 3 |

| 2012-11-02 | 1582 | 737 | 1109 | 2277 | 1392 | 97 | 1888 | 4 |

| 2012-11-03 | 844 | 380 | 612 | 1137 | 713 | 105 | 1302 | 5 |

| 2012-11-04 | 966 | 446 | 710 | 1277 | 692 | 197 | 1374 | 6 |

| 2012-11-05 | 2247 | 1170 | 1705 | 3221 | 2143 | 179 | 2430 | 0 |

Now, we can use this column for grouping operations.

day_grouping = bikes.groupby('weekday').mean()

day_grouping.index = ['Mon', 'Tue', 'Wed', 'Thu', 'Fri', 'Sat', 'Sun']

np.round(day_grouping)

| Berri 1 | Côte-Sainte-Catherine | Maisonneuve 1 | Maisonneuve 2 | du Parc | Pierre-Dupuy | Rachel1 | |

|---|---|---|---|---|---|---|---|

| Mon | 2984 | 1341 | 2001 | 3639 | 2004 | 1027 | 2892 |

| Tue | 3075 | 1334 | 2092 | 3770 | 2077 | 799 | 2729 |

| Wed | 3477 | 1531 | 2384 | 4229 | 2321 | 983 | 3025 |

| Thu | 3639 | 1569 | 2543 | 4471 | 2402 | 1031 | 3187 |

| Fri | 3222 | 1283 | 2240 | 3918 | 2043 | 965 | 3119 |

| Sat | 2309 | 773 | 1411 | 2388 | 1097 | 1201 | 2564 |

| Sun | 2207 | 810 | 1229 | 2185 | 1111 | 1366 | 2603 |

Which is the most popular day for biking? We generate a stacked bar plot to derive the answer graphically.

day_grouping.plot(kind='bar', stacked=True, legend=False)

<matplotlib.axes.AxesSubplot at 0x10b9cf750>

Obviously, Thursday wins the race.

Combining Two Data Sets¶

We next want to see which role the weather plays in explaining biker frequency in each month. To collect weather data we use a function provided in Julia Evans' Notebook.

# cf. http://nbviewer.ipython.org/urls/raw.github.com/jvns/talks/master/pyconca2013/pistes-cyclables.ipynb

def get_weather_data(year):

url1 = "http://climate.weather.gc.ca/climateData/"

url2 = "bulkdata_e.html?format=csv&stationID=5415"

url3 = "&Year={year}&Month={month}&timeframe=1&submit=Download+Data"

url_template = url1 + url2 + url3

# mctavish station: 10761, airport station: 5415

data_by_month = []

for month in range(1, 13):

url = url_template.format(year=year, month=month)

weather_data = pd.read_csv(url, skiprows=16, index_col='Date/Time',

parse_dates=True).dropna(axis=1)

weather_data.columns = map(lambda x: x.replace('\xb0', ''),

weather_data.columns)

weather_data = weather_data.drop(['Year', 'Day', 'Month', 'Time',

'Data Quality'], axis=1)

data_by_month.append(weather_data.dropna())

# Concatenate and drop any empty columns

return pd.concat(data_by_month).dropna(axis=1, how='all').dropna()

Let's retrieve the weather data for 2012.

try:

h5 = pd.HDFStore('./data/weather.h5', 'r')

weather = h5['weather']

h5.close()

except:

weather = get_weather_data(2012)

try:

h5 = pd.HDFStore('./data/weather.h5', 'w')

h5['weather'] = weather

h5.close()

except:

pass

weather.head()

| Temp (C) | Dew Point Temp (C) | Rel Hum (%) | Wind Spd (km/h) | Visibility (km) | Stn Press (kPa) | Weather | |

|---|---|---|---|---|---|---|---|

| Date/Time | |||||||

| 2012-01-01 00:00:00 | -1.8 | -3.9 | 86 | 4 | 8.0 | 101.24 | Fog |

| 2012-01-01 01:00:00 | -1.8 | -3.7 | 87 | 4 | 8.0 | 101.24 | Fog |

| 2012-01-01 02:00:00 | -1.8 | -3.4 | 89 | 7 | 4.0 | 101.26 | Freezing Drizzle,Fog |

| 2012-01-01 03:00:00 | -1.5 | -3.2 | 88 | 6 | 4.0 | 101.27 | Freezing Drizzle,Fog |

| 2012-01-01 04:00:00 | -1.5 | -3.3 | 88 | 7 | 4.8 | 101.23 | Fog |

Now, we can resample the time series data sets to look for correlation between e.g. the average temperature and the average bike frequency.

interval = '1m' # resampling period, e.g. 1 month

bikes_int = bikes.resample(rule=interval, how='sum') # bike frequency per interval

del bikes_int['weekday']

bikes_int.tail()

| Berri 1 | Côte-Sainte-Catherine | Maisonneuve 1 | Maisonneuve 2 | du Parc | Pierre-Dupuy | Rachel1 | |

|---|---|---|---|---|---|---|---|

| Date | |||||||

| 2012-07-31 | 162562 | 59657 | 101969 | 182673 | 88010 | 80033 | 150703 |

| 2012-08-31 | 149227 | 61589 | 95110 | 168556 | 84261 | 57583 | 137377 |

| 2012-09-30 | 127061 | 57986 | 83540 | 147277 | 86611 | 42328 | 123757 |

| 2012-10-31 | 94793 | 45366 | 63551 | 115360 | 71607 | 19746 | 93959 |

| 2012-11-30 | 8044 | 3941 | 5837 | 10994 | 7016 | 743 | 9455 |

We do the same with the temperature data.

temp_int = weather['Temp (C)'].resample(rule=interval, how='mean')

np.round(temp_int.tail(), 1)

Date/Time 2012-08-31 22.3 2012-09-30 16.5 2012-10-31 11.0 2012-11-30 0.9 2012-12-31 -3.3 Freq: M, dtype: float64

for col in bikes_int.columns:

cor = bikes_int[col].corr(temp_int)

print "Correlation av. %22s path bike frequency and av. temp. %4.3f" % (col, cor)

Correlation av. Berri 1 path bike frequency and av. temp. 0.979 Correlation av. Côte-Sainte-Catherine path bike frequency and av. temp. 0.973 Correlation av. Maisonneuve 1 path bike frequency and av. temp. 0.974 Correlation av. Maisonneuve 2 path bike frequency and av. temp. 0.978 Correlation av. du Parc path bike frequency and av. temp. 0.964 Correlation av. Pierre-Dupuy path bike frequency and av. temp. 0.942 Correlation av. Rachel1 path bike frequency and av. temp. 0.979

We briefly check graphically if we can trust our numerical results.

new_df = pd.DataFrame({'Bikes': bikes_int['Berri 1'] , 'Temp': temp_int})

new_df = (new_df - new_df.mean()) / new_df.mean() * 100

new_df.plot(grid=True)

<matplotlib.axes.AxesSubplot at 0x10bb640d0>

Why Python for Data Analytics & Visualization?¶

10 years ago, Python was considered exotic in the analytics space – at best. Languages/packages like R and Matlab dominated the scene. Today, Python has become a major force in data analytics & visualization due to a number of characteristics:

- multi-purpose: prototyping, development, production, sytems administration – Python is one for all

- libraries: there is a library for almost any task or problem you face

- efficiency: Python speeds up all IT development tasks for analytics applications and reduces maintenance costs

- performance: Python has evolved from a scripting language to a 'meta' language with bridges to all high performance environments (e.g. LLVM, multi-core CPUs, GPUs, clusters)

- interoperalbility: Python seamlessly integrates with almost any other language and technology

- interactivity: Python allows domain experts to get closer to their business and financial data pools and to do real-time analytics

- collaboration: solutions like Wakari with IPython Notebook allow the easy sharing of code, data, results, graphics, etc.

One of the easiest ways to deploy Python today across a whole organization with a heterogenous IT infrastructure is via Wakari, Continuum's Web-/Browser- and Python-based Data Analytics environment. It is availble both as a cloud as well as an enterprise solution for in-house deployment.

The Python Quants GmbH – Python Data Exploration & Visualization¶

The Python Quants – the company Web site

Dr. Yves J. Hilpisch – my personal Web site

Derivatives Analytics with Python – my new book

Read an Excerpt and Order the Book

Contact Us

# Autosave Function for IPyton Notebook

def autosave(interval=3):

"""Autosave the notebook every interval (in minutes)"""

from IPython.core.display import Javascript

interval *= 60 * 1000 # JS wants intervals in miliseconds

tpl = 'setInterval ( "IPython.notebook.save_notebook()", %i );'

return Javascript(tpl % interval)

autosave()

<IPython.core.display.Javascript at 0x10bfb5a10>