A Better Future with Python¶

Dr. Yves J. Hilpisch

The Python Quants GmbH

Pycon Ireland, Dublin, 12. October 2013

About Me¶

A brief bio:

- Managing Partner of The Python Quants

- Founder of Visixion GmbH – The Python Quants

- Lecturer Mathematical Finance at Saarland University

- Focus on Financial Industry and Financial Analytics

- Book (2013) "Derivatives Analytics with Python"

- Book project "Python for Finance" with O'Reilly

- Dr.rer.pol in Mathematical Finance

- Graduate in Business Administration

- Martial Arts Practitioner and Fan

See www.hilpisch.com

Better Future¶

Progress¶

How can economic and/or social progress be measured?

- Efficiency – use less resources for a given output

- Productivity – generate more output from given resources

- Quality – create something new

Language¶

"It is argued that language plays an active role in the development of scientific ideas. ...

And is mathematics somehow more or less than a language? ...

A particular characteristic of mathematics which appears in one aspect to differentiate it from language is its appeal to visual thinking."

Alan Ford and F. David Peat (1988): "The Role of Language in Science."

"As I gradually improved my in-code documentation, I realized that English is a natural language, but computer languages, regardless of how well we use them, are still 'code.' Communication via natural language is a relatively quick and efficient process. Not so with computer languages: They must be 'decoded' for efficient human understanding."

David Zokaities (2004): "Writing Understandable Code".

Today's trinity of scientifc languages:

- the spoken/written language – in general English

- the formal language – logic, mathematics, etc.

- the programming language – Python, for example

Python seems to be the natural completion of the scientific language stack:

English

"Calculate the inner values of a call option at maturity with strike of 105 for 10,000 simulated prices of stock S."

Mathematics

Python/NumPy

S0 =100

K = 105

I = 10000

ST = S0 * standard_normal(I)

h = maximum(ST - K, 0)

The whole procedure can be made even more expressive.

stock_price = ST

strike_price = K

payoff_func = 'maximum(strike_price - stock_price, 0)' # put option

h = eval(payoff_func)

Scientific publishing has been revolutionized by Latex and its derivatives through combining

- word processing with

- mathematical formula rendering capabilities

The researcher can by herself/himself generate publication-ready output in a highly customizable fashion.

Then, when the need came up,

- syntax highlighting capabilities

were added (e.g. for Python)

Nowadays, the tools available in the Python space take all this one step further.

- Sphinx

- IPython Notebook

- PythonTEX

What exactly does this mean?

- Executable Code as part of the document sources for automatic inclusion of the code's results.

- Open Research with reproducable results for everyone interested.

- Higher Efficiency, Productivity, Quality

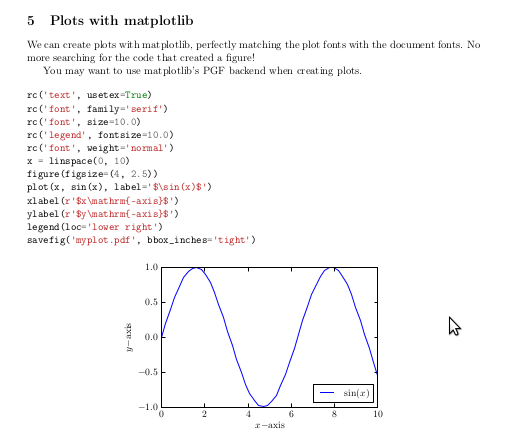

# \section{Plots with matplotlib}

# We can create plots with matplotlib, perfectly matching the plot fonts with the document fonts.

# No more searching for the code that created a figure!

# You may want to use matplotlib's PGF backend when creating plots.

# \begin{pylabblock}

# rc('text', usetex=True)

# rc('font', family='serif')

# rc('font', size=10.0)

# rc('legend', fontsize=10.0)

# rc('font', weight='normal')

# x = linspace(0, 10)

# figure(figsize=(4, 2.5))

# plot(x, sin(x), label='$\sin(x)$')

# xlabel(r'$x\mathrm{-axis}$')

# ylabel(r'$y\mathrm{-axis}$')

# legend(loc='lower right')

# savefig('myplot.pdf', bbox_inches='tight')

# \end{pylabblock}

# \begin{center}

# \includegraphics{myplot}

# \end{center}

Source www.github.com.

Source www.github.com.

Efficiency¶

To reach a given goal with minimal resources.

Genome Sequencing¶

Using Python to generate faster research results.

"Saturday, June 22, 2013

Python for Next-Generation Sequencing

... a story about how a challenging genome sequencing project demonstrated once again, what an awesome tool Python can be ...

Here are the results from one million regular expression searches of a genome of 250 million bases:

- Number of matches found in sequence = 61,226

- 1,000,000 searches completed in 0.671034 seconds

- Mean search time = 6.71034e-07 seconds

Fast! ...

Like much of the Python Standard Library, the re module that handles regular expressions is actually written in C, so while the initial function call to the search may be handled by the Python interpreter, the subsequent search is actually being run in compiled native code, which explains its efficiency."

Source www.digitalbiologist.com.

Just-in-Time Compiling¶

Reducing execution time.

Let's start with pure Python.

def f(m, n):

total = 0.0

for i in range(m):

for j in range (n):

total += sin(i * j)

return round(total, 2)

m = 1000

n = m

%time f(m, n)

CPU times: user 3.62 s, sys: 11.4 ms, total: 3.63 s Wall time: 3.65 s

72.55

The NumPy solution.

def f_np(m, n):

v1 = resize(arange(m), (n, m))

v2 = resize(arange(n), (m, n))

v = v1 * v2.T

return round(sum(sin(v)), 2)

%time f_np(m, n)

CPU times: user 46.6 ms, sys: 22.2 ms, total: 68.8 ms Wall time: 68.4 ms

72.55

The Numba compiled version.

import numba

f_jit = numba.autojit(f)

f_jit(n, m)

# The LLVM code output:

DEBUG -- translate:361:translate

; ModuleID = 'tmp.module.__main__.f.1059bbc80'

@PyArray_API = linkonce_odr global i8** inttoptr (i64 4324895424 to i8**)

define double @__numba_specialized_0___main___2E_f(i32 %m, i32 %n) {

entry:

%objtemp5 = alloca { i64, i8* }*

store { i64, i8* }* null, { i64, i8* }** %objtemp5, !tbaa !6

%objtemp4 = alloca { i64, i8* }*

store { i64, i8* }* null, { i64, i8* }** %objtemp4, !tbaa !6

%objtemp3 = alloca { i64, i8* }*

store { i64, i8* }* null, { i64, i8* }** %objtemp3, !tbaa !6

%objtemp = alloca { i64, i8* }*

store { i64, i8* }* null, { i64, i8* }** %objtemp, !tbaa !6

%nsteps2 = alloca i64

%target_temp1 = alloca i64

%nsteps = alloca i64

%target_temp = alloca i64

%return_value = alloca double

store i64 0, i64* %target_temp, !tbaa !2

%0 = sext i32 %m to i64

store i64 %0, i64* %nsteps, !tbaa !3

br label %"for_condition_3:13"

cleanup_label: ; preds = %"no_error_6:178", %error_label

%1 = load { i64, i8* }** %objtemp4, !tbaa !6

call void @Py_XDECREF({ i64, i8* }* %1)

%2 = load { i64, i8* }** %objtemp5, !tbaa !6

call void @Py_XDECREF({ i64, i8* }* %2)

%3 = load { i64, i8* }** %objtemp3, !tbaa !6

call void @Py_XDECREF({ i64, i8* }* %3)

%4 = load { i64, i8* }** %objtemp, !tbaa !6

call void @Py_XDECREF({ i64, i8* }* %4)

%5 = load double* %return_value

ret double %5

error_label: ; preds = %"no_error_6:177", %"no_error_6:176", %"no_error_6:17", %"exit_for_3:4"

store double 0x7FF8000000000000, double* %return_value

br label %cleanup_label

"for_condition_3:13": ; preds = %entry, %"exit_for_4:8"

%total_2 = phi double [ 0.000000e+00, %entry ], [ %total_3, %"exit_for_4:8" ]

%j_1 = phi i64 [ 123456789, %entry ], [ %j_2, %"exit_for_4:8" ]

%6 = load i64* %target_temp, !tbaa !2

%7 = load i64* %nsteps, !tbaa !3

%8 = icmp slt i64 %6, %7

%9 = icmp ne i1 %8, false

br i1 %9, label %"loop_body_4:8", label %"exit_for_3:4"

"exit_for_3:4": ; preds = %"for_condition_3:13"

%10 = call { i64, i8* }* @PyFloat_FromDouble(double %total_2)

store { i64, i8* }* %10, { i64, i8* }** %objtemp4, !tbaa !6

%11 = ptrtoint { i64, i8* }* %10 to i64

%12 = icmp eq i64 %11, 0

br i1 %12, label %error_label, label %"no_error_6:17"

"loop_body_4:8": ; preds = %"for_condition_3:13"

%13 = load i64* %target_temp, !tbaa !2

%14 = load i64* %target_temp, !tbaa !2

%15 = add i64 %14, 1

store i64 %15, i64* %target_temp, !tbaa !2

store i64 0, i64* %target_temp1, !tbaa !4

%16 = sext i32 %n to i64

store i64 %16, i64* %nsteps2, !tbaa !5

br label %"for_condition_4:17"

"for_condition_4:17": ; preds = %"loop_body_4:8", %"loop_body_5:21"

%total_3 = phi double [ %27, %"loop_body_5:21" ], [ %total_2, %"loop_body_4:8" ]

%j_2 = phi i64 [ %21, %"loop_body_5:21" ], [ %j_1, %"loop_body_4:8" ]

%17 = load i64* %target_temp1, !tbaa !4

%18 = load i64* %nsteps2, !tbaa !5

%19 = icmp slt i64 %17, %18

%20 = icmp ne i1 %19, false

br i1 %20, label %"loop_body_5:21", label %"exit_for_4:8"

"exit_for_4:8": ; preds = %"for_condition_4:17"

br label %"for_condition_3:13"

"loop_body_5:21": ; preds = %"for_condition_4:17"

%21 = load i64* %target_temp1, !tbaa !4

%22 = load i64* %target_temp1, !tbaa !4

%23 = add i64 %22, 1

store i64 %23, i64* %target_temp1, !tbaa !4

%24 = mul i64 %13, %21

%25 = sitofp i64 %24 to double

%26 = call double @"numba.math.['double'].sin"(double %25)

%27 = fadd double %total_3, %26

br label %"for_condition_4:17"

"no_error_6:17": ; preds = %"exit_for_3:4"

%28 = load { i64, i8* }** %objtemp4, !tbaa !6

%29 = call { i64, i8* }* inttoptr (i64 4488711476 to { i64, i8* }* (i64)*)(i64 2)

store { i64, i8* }* %29, { i64, i8* }** %objtemp5, !tbaa !6

%30 = ptrtoint { i64, i8* }* %29 to i64

%31 = icmp eq i64 %30, 0

br i1 %31, label %error_label, label %"no_error_6:176"

"no_error_6:176": ; preds = %"no_error_6:17"

%32 = load { i64, i8* }** %objtemp5, !tbaa !6

%33 = call { i64, i8* }* (i64, ...)* @PyTuple_Pack(i64 2, { i64, i8* }* %28, { i64, i8* }* %32)

store { i64, i8* }* %33, { i64, i8* }** %objtemp3, !tbaa !6

%34 = ptrtoint { i64, i8* }* %33 to i64

%35 = icmp eq i64 %34, 0

br i1 %35, label %error_label, label %"no_error_6:177"

"no_error_6:177": ; preds = %"no_error_6:176"

%36 = load { i64, i8* }** %objtemp3, !tbaa !6

%37 = call { i64, i8* }* @PyObject_Call({ i64, i8* }* inttoptr (i64 4297723128 to { i64, i8* }*), { i64, i8* }* %36, { i64, i8* }* null)

store { i64, i8* }* %37, { i64, i8* }** %objtemp, !tbaa !6

%38 = ptrtoint { i64, i8* }* %37 to i64

%39 = icmp eq i64 %38, 0

br i1 %39, label %error_label, label %"no_error_6:178"

"no_error_6:178": ; preds = %"no_error_6:177"

%40 = load { i64, i8* }** %objtemp, !tbaa !6

%41 = call double @PyFloat_AsDouble({ i64, i8* }* %40)

store double %41, double* %return_value

br label %cleanup_label

}

declare double @PyFloat_AsDouble({ i64, i8* }*)

declare { i64, i8* }* @PyTuple_Pack(i64, ...)

declare { i64, i8* }* @PyFloat_FromDouble(double)

declare { i64, i8* }* @Py_BuildValue(i8*, ...)

declare i32 @PyArg_ParseTuple({ i64, i8* }*, i8*, ...)

declare void @PyErr_Clear()

declare double @"numba.math.['double'].sin"(double)

declare void @Py_XDECREF({ i64, i8* }*)

declare { i64, i8* }* @PyObject_Call({ i64, i8* }*, { i64, i8* }*, { i64, i8* }*)

!tbaa = !{!0, !1, !2, !3, !4, !5, !6}

!0 = metadata !{metadata !"root"}

!1 = metadata !{metadata !"char *", metadata !0}

!2 = metadata !{metadata !"unique0", metadata !1}

!3 = metadata !{metadata !"unique1", metadata !1}

!4 = metadata !{metadata !"unique2", metadata !1}

!5 = metadata !{metadata !"unique3", metadata !1}

!6 = metadata !{metadata !"object", metadata !1}

DEBUG -- translate:361:translate

; ModuleID = 'numba_executable_module'

@PyArray_API = linkonce_odr global i8** inttoptr (i64 4324895424 to i8**)

define void @Py_INCREF({ i64, i8* }* %obj) {

decl:

%obj1 = alloca { i64, i8* }*

store { i64, i8* }* %obj, { i64, i8* }** %obj1

%0 = bitcast { i64, i8* }* %obj to i64*

%1 = load i64* %0

%2 = add i64 %1, 1

store i64 %2, i64* %0

ret void

}

define void @Py_DECREF({ i64, i8* }* %obj) {

decl:

%obj1 = alloca { i64, i8* }*

store { i64, i8* }* %obj, { i64, i8* }** %obj1

%0 = bitcast { i64, i8* }* %obj to i64*

%1 = load i64* %0

%2 = icmp sgt i64 %1, 1

br i1 %2, label %if.then, label %if.else

if.then: ; preds = %decl

%3 = bitcast { i64, i8* }* %obj to i64*

%4 = add i64 %1, -1

store i64 %4, i64* %3

br label %if.end

if.else: ; preds = %decl

call void @Py_DecRef({ i64, i8* }* %obj)

br label %if.end

if.end: ; preds = %if.else, %if.then

ret void

}

declare void @Py_DecRef({ i64, i8* }*)

define void @Py_XINCREF({ i64, i8* }* %obj) {

decl:

%obj1 = alloca { i64, i8* }*

store { i64, i8* }* %obj, { i64, i8* }** %obj1

%0 = ptrtoint { i64, i8* }* %obj to i64

%1 = icmp ne i64 %0, 0

br i1 %1, label %if.then, label %if.end

if.then: ; preds = %decl

%2 = bitcast { i64, i8* }* %obj to i64*

%3 = load i64* %2

%4 = add i64 %3, 1

store i64 %4, i64* %2

br label %if.end

if.end: ; preds = %if.then, %decl

ret void

}

define void @Py_XDECREF({ i64, i8* }* %obj) {

decl:

%obj1 = alloca { i64, i8* }*

store { i64, i8* }* %obj, { i64, i8* }** %obj1

%0 = ptrtoint { i64, i8* }* %obj to i64

%1 = icmp ne i64 %0, 0

br i1 %1, label %if.then, label %if.end

if.then: ; preds = %decl

call void @Py_DECREF({ i64, i8* }* %obj)

br label %if.end

if.end: ; preds = %if.then, %decl

ret void

}

define i8* @IndexAxis(i8* %data, i64* %in_shape, i64* %in_strides, i64 %src_dim, i64 %index) {

decl:

%data1 = alloca i8*

%in_shape2 = alloca i64*

%in_strides3 = alloca i64*

%src_dim4 = alloca i64

%index5 = alloca i64

%result = alloca i8*

store i8* %data, i8** %data1

store i64* %in_shape, i64** %in_shape2

store i64* %in_strides, i64** %in_strides3

store i64 %src_dim, i64* %src_dim4

store i64 %index, i64* %index5

%0 = load i64** %in_strides3

%1 = load i64* %src_dim4

%2 = getelementptr inbounds i64* %0, i64 %1

%3 = load i64* %2

%4 = mul i64 %3, %index

%5 = load i8** %data1

%6 = getelementptr inbounds i8* %5, i64 %4

store i8* %6, i8** %result

ret i8* %6

}

define void @NewAxis(i64* %out_shape, i64* %out_strides, i32 %dst_dim) {

decl:

%out_shape1 = alloca i64*

%out_strides2 = alloca i64*

%dst_dim3 = alloca i32

store i64* %out_shape, i64** %out_shape1

store i64* %out_strides, i64** %out_strides2

store i32 %dst_dim, i32* %dst_dim3

%0 = load i64** %out_shape1

%1 = getelementptr inbounds i64* %0, i32 %dst_dim

store i64 1, i64* %1

%2 = load i64** %out_strides2

%3 = load i32* %dst_dim3

%4 = getelementptr inbounds i64* %2, i32 %3

store i64 0, i64* %4

ret void

}

define i32 @Broadcast(i64* %dst_shape, i64* %src_shape, i64* %src_strides, i32 %max_ndim, i32 %ndim) {

decl:

%dst_shape1 = alloca i64*

%src_shape2 = alloca i64*

%src_strides3 = alloca i64*

%max_ndim4 = alloca i32

%ndim5 = alloca i32

%0 = alloca i32

store i64* %dst_shape, i64** %dst_shape1

store i64* %src_shape, i64** %src_shape2

store i64* %src_strides, i64** %src_strides3

store i32 %max_ndim, i32* %max_ndim4

store i32 %ndim, i32* %ndim5

%1 = load i32* %max_ndim4

%2 = sub i32 %1, %ndim

store i32 0, i32* %0

br label %loop.cond

loop.cond: ; preds = %if.end11, %decl

%3 = load i32* %0

%4 = load i32* %ndim5

%5 = icmp slt i32 %3, %4

br i1 %5, label %loop.body, label %loop.end

loop.body: ; preds = %loop.cond

%6 = load i64** %src_shape2

%7 = getelementptr inbounds i64* %6, i32 %3

%8 = add i32 %3, %2

%9 = load i64** %dst_shape1

%10 = getelementptr inbounds i64* %9, i32 %8

%11 = load i64* %7

%12 = icmp eq i64 %11, 1

br i1 %12, label %if.then, label %if.else

loop.end: ; preds = %if.else7, %loop.cond

%merge = phi i32 [ 1, %loop.cond ], [ 0, %if.else7 ]

ret i32 %merge

if.then: ; preds = %loop.body

%13 = load i64** %src_strides3

%14 = getelementptr inbounds i64* %13, i32 %3

store i64 0, i64* %14

br label %if.end11

if.else: ; preds = %loop.body

%15 = load i64* %10

%16 = icmp eq i64 %15, 1

br i1 %16, label %if.then6, label %if.else7

if.then6: ; preds = %if.else

store i64 %11, i64* %10

br label %if.end11

if.else7: ; preds = %if.else

%17 = icmp ne i64 %11, %15

br i1 %17, label %loop.end, label %if.end11

if.end11: ; preds = %if.else7, %if.then6, %if.then

%18 = load i32* %0

%19 = add i32 %18, 1

store i32 %19, i32* %0

br label %loop.cond

}

define double @__numba_specialized_0___main___2E_f(i32 %m, i32 %n) {

entry:

%0 = icmp sgt i32 %m, 0

br i1 %0, label %"loop_body_4:8.lr.ph", label %"exit_for_3:4"

"loop_body_4:8.lr.ph": ; preds = %entry

%1 = icmp sgt i32 %n, 0

br i1 %1, label %"loop_body_4:8.lr.ph.split.us", label %"loop_body_4:8.lr.ph.loop_body_4:8.lr.ph.split_crit_edge"

"loop_body_4:8.lr.ph.loop_body_4:8.lr.ph.split_crit_edge": ; preds = %"loop_body_4:8.lr.ph"

%2 = sext i32 %m to i64

br label %"for_condition_3:13.loopexit"

"loop_body_4:8.lr.ph.split.us": ; preds = %"loop_body_4:8.lr.ph"

%3 = sext i32 %m to i64

%4 = sext i32 %n to i64

br label %"loop_body_5:21.lr.ph.us"

"loop_body_5:21.us": ; preds = %"loop_body_5:21.us", %"loop_body_5:21.lr.ph.us"

%lsr.iv2 = phi i64 [ %lsr.iv.next3, %"loop_body_5:21.us" ], [ %4, %"loop_body_5:21.lr.ph.us" ]

%lsr.iv = phi i64 [ %lsr.iv.next, %"loop_body_5:21.us" ], [ 0, %"loop_body_5:21.lr.ph.us" ]

%total_318.us = phi double [ %total_219.us, %"loop_body_5:21.lr.ph.us" ], [ %7, %"loop_body_5:21.us" ]

%5 = sitofp i64 %lsr.iv to double

%6 = tail call double @"numba.math.['double'].sin"(double %5)

%7 = fadd double %total_318.us, %6

%lsr.iv.next = add i64 %lsr.iv, %8

%lsr.iv.next3 = add i64 %lsr.iv2, -1

%exitcond21 = icmp eq i64 %lsr.iv.next3, 0

br i1 %exitcond21, label %"for_condition_3:13.loopexit.us", label %"loop_body_5:21.us"

"for_condition_3:13.loopexit.us": ; preds = %"loop_body_5:21.us"

%exitcond22 = icmp eq i64 %9, %3

br i1 %exitcond22, label %"exit_for_3:4", label %"loop_body_5:21.lr.ph.us"

"loop_body_5:21.lr.ph.us": ; preds = %"loop_body_4:8.lr.ph.split.us", %"for_condition_3:13.loopexit.us"

%total_219.us = phi double [ 0.000000e+00, %"loop_body_4:8.lr.ph.split.us" ], [ %7, %"for_condition_3:13.loopexit.us" ]

%8 = phi i64 [ 0, %"loop_body_4:8.lr.ph.split.us" ], [ %9, %"for_condition_3:13.loopexit.us" ]

%9 = add i64 %8, 1

br label %"loop_body_5:21.us"

cleanup_label: ; preds = %"exit_for_3:4", %"no_error_6:17", %"no_error_6:176", %"no_error_6:177", %"no_error_6:178"

%objtemp5.0.load1417 = phi { i64, i8* }* [ %12, %"no_error_6:178" ], [ %12, %"no_error_6:177" ], [ %12, %"no_error_6:176" ], [ null, %"no_error_6:17" ], [ null, %"exit_for_3:4" ]

%objtemp3.0.load1216 = phi { i64, i8* }* [ %14, %"no_error_6:178" ], [ %14, %"no_error_6:177" ], [ null, %"no_error_6:176" ], [ null, %"no_error_6:17" ], [ null, %"exit_for_3:4" ]

%objtemp.0.load1115 = phi { i64, i8* }* [ %16, %"no_error_6:178" ], [ null, %"no_error_6:177" ], [ null, %"no_error_6:176" ], [ null, %"no_error_6:17" ], [ null, %"exit_for_3:4" ]

%storemerge = phi double [ %18, %"no_error_6:178" ], [ 0x7FF8000000000000, %"no_error_6:177" ], [ 0x7FF8000000000000, %"no_error_6:176" ], [ 0x7FF8000000000000, %"no_error_6:17" ], [ 0x7FF8000000000000, %"exit_for_3:4" ]

tail call void @Py_XDECREF({ i64, i8* }* %10)

tail call void @Py_XDECREF({ i64, i8* }* %objtemp5.0.load1417)

tail call void @Py_XDECREF({ i64, i8* }* %objtemp3.0.load1216)

tail call void @Py_XDECREF({ i64, i8* }* %objtemp.0.load1115)

ret double %storemerge

"for_condition_3:13.loopexit": ; preds = %"loop_body_4:8.lr.ph.loop_body_4:8.lr.ph.split_crit_edge", %"for_condition_3:13.loopexit"

%lsr.iv4 = phi i64 [ %2, %"loop_body_4:8.lr.ph.loop_body_4:8.lr.ph.split_crit_edge" ], [ %lsr.iv.next5, %"for_condition_3:13.loopexit" ]

%lsr.iv.next5 = add i64 %lsr.iv4, -1

%exitcond = icmp eq i64 %lsr.iv.next5, 0

br i1 %exitcond, label %"exit_for_3:4", label %"for_condition_3:13.loopexit"

"exit_for_3:4": ; preds = %"for_condition_3:13.loopexit", %"for_condition_3:13.loopexit.us", %entry

%total_2.lcssa = phi double [ 0.000000e+00, %entry ], [ %7, %"for_condition_3:13.loopexit.us" ], [ 0.000000e+00, %"for_condition_3:13.loopexit" ]

%10 = tail call { i64, i8* }* @PyFloat_FromDouble(double %total_2.lcssa)

%11 = icmp eq { i64, i8* }* %10, null

br i1 %11, label %cleanup_label, label %"no_error_6:17"

"no_error_6:17": ; preds = %"exit_for_3:4"

%12 = tail call { i64, i8* }* inttoptr (i64 4488711476 to { i64, i8* }* (i64)*)(i64 2)

%13 = icmp eq { i64, i8* }* %12, null

br i1 %13, label %cleanup_label, label %"no_error_6:176"

"no_error_6:176": ; preds = %"no_error_6:17"

%14 = tail call { i64, i8* }* (i64, ...)* @PyTuple_Pack(i64 2, { i64, i8* }* %10, { i64, i8* }* %12)

%15 = icmp eq { i64, i8* }* %14, null

br i1 %15, label %cleanup_label, label %"no_error_6:177"

"no_error_6:177": ; preds = %"no_error_6:176"

%16 = tail call { i64, i8* }* @PyObject_Call({ i64, i8* }* inttoptr (i64 4297723128 to { i64, i8* }*), { i64, i8* }* %14, { i64, i8* }* null)

%17 = icmp eq { i64, i8* }* %16, null

br i1 %17, label %cleanup_label, label %"no_error_6:178"

"no_error_6:178": ; preds = %"no_error_6:177"

%18 = tail call double @PyFloat_AsDouble({ i64, i8* }* %16)

br label %cleanup_label

}

declare double @PyFloat_AsDouble({ i64, i8* }*)

declare { i64, i8* }* @PyTuple_Pack(i64, ...)

declare { i64, i8* }* @PyFloat_FromDouble(double)

declare double @"numba.math.['double'].sin"(double)

declare { i64, i8* }* @PyObject_Call({ i64, i8* }*, { i64, i8* }*, { i64, i8* }*)

define { i64, i8* }* @__numba_specialized_1_numba_2E_codegen_2E_llvmwrapper_2E___numba_wrapper_f(i8* %self, { i64, i8* }* %args) {

entry:

%objtemp = alloca { i64, i8* }*

store { i64, i8* }* null, { i64, i8* }** %objtemp, !tbaa !6

%0 = alloca { i64, i8* }*

%1 = alloca { i64, i8* }*

%return_value = alloca { i64, i8* }*

%2 = call i32 ({ i64, i8* }*, i8*, ...)* @PyArg_ParseTuple({ i64, i8* }* %args, i8* getelementptr inbounds ([3 x i8]* @__STR_0, i32 0, i32 0), { i64, i8* }** %1, { i64, i8* }** %0)

%3 = icmp eq i32 %2, 0

br i1 %3, label %cleanup.if.true, label %cleanup.if.end

cleanup_label: ; preds = %no_error, %error_label

%4 = load { i64, i8* }** %objtemp, !tbaa !6

call void @Py_XDECREF({ i64, i8* }* %4)

%5 = load { i64, i8* }** %return_value

ret { i64, i8* }* %5

error_label: ; preds = %empty7, %empty8, %empty5, %empty2, %cleanup.if.true

store { i64, i8* }* null, { i64, i8* }** %return_value

%6 = load { i64, i8* }** %return_value, !tbaa !6

call void @Py_XINCREF({ i64, i8* }* %6)

br label %cleanup_label

cleanup.if.true: ; preds = %entry

br label %error_label

cleanup.if.end: ; preds = %entry

%7 = load { i64, i8* }** %1

%8 = load { i64, i8* }** %0

%9 = call i32 inttoptr (i64 4488712338 to i32 ({ i64, i8* }*)*)({ i64, i8* }* %7)

br label %empty

empty: ; preds = %cleanup.if.end

%10 = call i8* @PyErr_Occurred()

%11 = ptrtoint i8* %10 to i64

%12 = icmp ne i64 %11, 0

br i1 %12, label %empty2, label %empty1

empty1: ; preds = %empty

%13 = call i32 inttoptr (i64 4488712338 to i32 ({ i64, i8* }*)*)({ i64, i8* }* %8)

br label %empty3

empty2: ; preds = %empty

br label %error_label

empty3: ; preds = %empty1

%14 = call i8* @PyErr_Occurred()

%15 = ptrtoint i8* %14 to i64

%16 = icmp ne i64 %15, 0

br i1 %16, label %empty5, label %empty4

empty4: ; preds = %empty3

%17 = call double @__numba_specialized_0___main___2E_f(i32 %9, i32 %13)

br label %empty6

empty5: ; preds = %empty3

br label %error_label

empty6: ; preds = %empty4

%18 = call i8* @PyErr_Occurred()

%19 = ptrtoint i8* %18 to i64

%20 = icmp ne i64 %19, 0

br i1 %20, label %empty8, label %empty7

empty7: ; preds = %empty6

%21 = call { i64, i8* }* @PyFloat_FromDouble(double %17)

store { i64, i8* }* %21, { i64, i8* }** %objtemp, !tbaa !6

%22 = ptrtoint { i64, i8* }* %21 to i64

%23 = icmp eq i64 %22, 0

br i1 %23, label %error_label, label %no_error

empty8: ; preds = %empty6

br label %error_label

no_error: ; preds = %empty7

%24 = load { i64, i8* }** %objtemp, !tbaa !6

store { i64, i8* }* %24, { i64, i8* }** %return_value

%25 = load { i64, i8* }** %return_value, !tbaa !6

call void @Py_XINCREF({ i64, i8* }* %25)

br label %cleanup_label

}

declare i8* @PyErr_Occurred()

declare { i64, i8* }* @Py_BuildValue(i8*, ...)

declare i32 @PyArg_ParseTuple({ i64, i8* }*, i8*, ...)

declare void @PyErr_Clear()

!tbaa = !{!0, !1, !2, !3, !4, !5, !6, !0, !1, !6}

!0 = metadata !{metadata !"root"}

!1 = metadata !{metadata !"char *", metadata !0}

!2 = metadata !{metadata !"unique0", metadata !1}

!3 = metadata !{metadata !"unique1", metadata !1}

!4 = metadata !{metadata !"unique2", metadata !1}

!5 = metadata !{metadata !"unique3", metadata !1}

!6 = metadata !{metadata !"object", metadata !1}

72.55

This version is even faster than NumPy version.

%time f_jit(n, m)

CPU times: user 22.5 ms, sys: 63 µs, total: 22.5 ms Wall time: 22.6 ms

72.55

Wait a minute: Although the NumPy solution is already quite fast and vectorization is often an advisable strategy, what about memory issues with "too large" arrays?

Future: Blaze – disk-based, possibly distributed, arrays for big data (think of a combination of NumPy, PyTables and Numba on steroids)

Productivity¶

To generate maximum output given your resources.

Parallel Execution of Code¶

Increasing the output given a certain algorithm and hardware.

The algorithm we (arbitrarily) choose is the Monte Carlo estimator for a European option in the Black-Scholes-Merton set-up.

$$dS_t = r S_t dt + \sigma S_t dZ_t$$

def BSM_MCS(S0):

''' Black-Scholes-Merton MCS Estimator for European Calls. '''

import numpy as np

K = 100.; T = 1.0; r = 0.05; vola = 0.2

M = 50; I = 20000

dt = T / M

rand = np.random.standard_normal((M + 1, I))

S = np.zeros((M + 1, I)); S[0] = S0

for t in range(1, M + 1):

S[t] = S[t-1] * np.exp((r - 0.5 * vola ** 2) * dt + vola * np.sqrt(dt) * rand[t])

BSM_MCS_Value = np.sum(np.maximum(S[-1] - K, 0)) / I

return BSM_MCS_Value

The benchmark case of sequential execution.

from time import time

n = 100

def seqValue():

optionValues = []

for S in linspace(80, 100, n):

optionValues.append(BSM_MCS(S))

return optionValues

t0 = time()

seqValue()

t1 = time(); d1 = t1 - t0

print "Number of Options %8d" % n

print "Duration in Seconds %8.3f" % d1

print "Options per Second %8.3f" % (n / d1)

Number of Options 100 Duration in Seconds 6.276 Options per Second 15.933

We initialize the parallel execution infrastructure.

# shell: ipcluster start -n 4 # 2 cores, 4 threads

from IPython.parallel import Client

cluster_profile = "default"

c = Client(profile=cluster_profile)

view = c.load_balanced_view()

The function for the parallel execution of a number of different options valuations.

def parValue():

optionValues = []

for S in linspace(80, 100, n):

value = view.apply_async(BSM_MCS, S)

# asynchronously calculate the option values

optionValues.append(value)

return optionValues

The case of parallel execution.

def execution():

optionValues = parValue() # calculate all values

print "Submitted tasks %8d" % len(optionValues)

c.wait(optionValues)

return optionValues

t0 = time()

optionValues = execution()

t1 = time(); d2 = t1 - t0

print "Duration in Seconds %8.3f" % d2

print "Options per Second %8.3f" % (n / d2)

print "Speed-up Factor %8.3f" % (d1 / d2)

Submitted tasks 100 Duration in Seconds 3.109 Options per Second 32.165 Speed-up Factor 2.019

Interactive Financial Analysis¶

Maximizing an analyst's output given his daily (weekly, monthly) capacity.

Daily Stock Price Data¶

Reading and analyzing daily stock price data.

import pandas as pd

import pandas.io.data as pdd

AAPL = pdd.DataReader('AAPL', data_source='yahoo', start='1/1/2003')

# retrieve Apple stock prices from Yahoo Finance since 2003

AAPL['60d'] = pd.rolling_mean(AAPL['Close'], window=60)

AAPL[['Close', '60d']].plot(grid=True)

<matplotlib.axes.AxesSubplot at 0x10e023650>

High-Frequency Stock Price Data¶

Reading intraday, high-frequency data from a Web source, resampling and plotting it.

url = 'http://hopey.netfonds.no/posdump.php?date=20131007&paper=AAPL.O&csv_format=csv'

AAPL = pd.read_csv(url, index_col=0, header=0, parse_dates=True)

AAPL

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 13597 entries, 2013-10-07 10:00:01 to 2013-10-07 22:20:54 Data columns (total 6 columns): bid 13597 non-null values bid_depth 13597 non-null values bid_depth_total 13597 non-null values offer 13597 non-null values offer_depth 13597 non-null values offer_depth_total 13597 non-null values dtypes: float64(2), int64(4)

Tasks that are quite complex in general are easily implemented, like resampling.

AAPL_10min = AAPL.resample(rule='10min', how='mean')

AAPL_10min['bid'].plot()

<matplotlib.axes.AxesSubplot at 0x10e067c90>

A real headache of data scientists – missing data – is easily coped with.

AAPL_10min['bid'].fillna(method='ffill').plot()

<matplotlib.axes.AxesSubplot at 0x100786190>

Quality¶

Doing new things.

Better Investment Decisions through Regulation¶

Using Python to making contractual features of ABS transparent.

To increase the ability of investors to assess the quality and mechanisms behind Asset Backed Sercurities (ABS) – those responsible for the financial crisis of 2007/2008 – the Securities and Exchange Commission (SEC) proposed in 2010 to use Python Programs (with XML files) to exactly describe their contractual Flow of Funds ("waterfall").

“We are proposing to require that most ABS issuers file a computer program that gives effect to the flow of funds, or 'waterfall,' provisions of the transaction. We are proposing that the computer program be filed on EDGAR in the form of downloadable source code in Python. Python, as we will discuss further below, is an open source interpreted programming language. Under our proposal, an investor would be able to download the source code for the waterfall computer program and run the program on the investor’s own computer (properly configured with a Python interpreter). The waterfall computer program would be required to allow use of the asset data files that we are also proposing today. This proposed requirement is designed to make it easier for an investor to conduct a thorough investment analysis of the ABS offering at the time of its initial investment decision.”

Source SEC Proposal (2010), pp. 205-206.

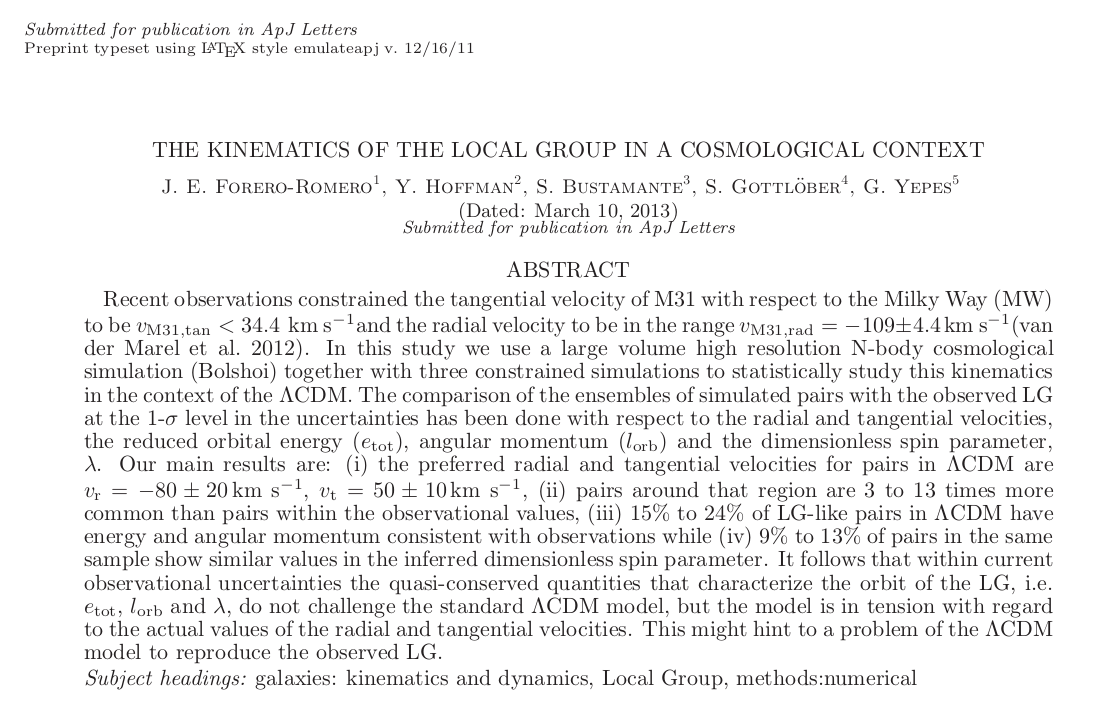

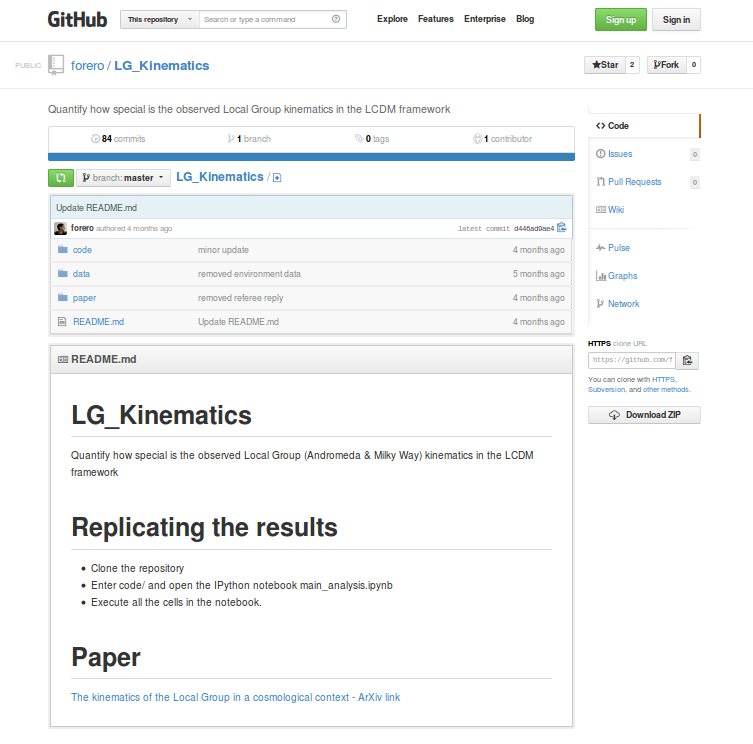

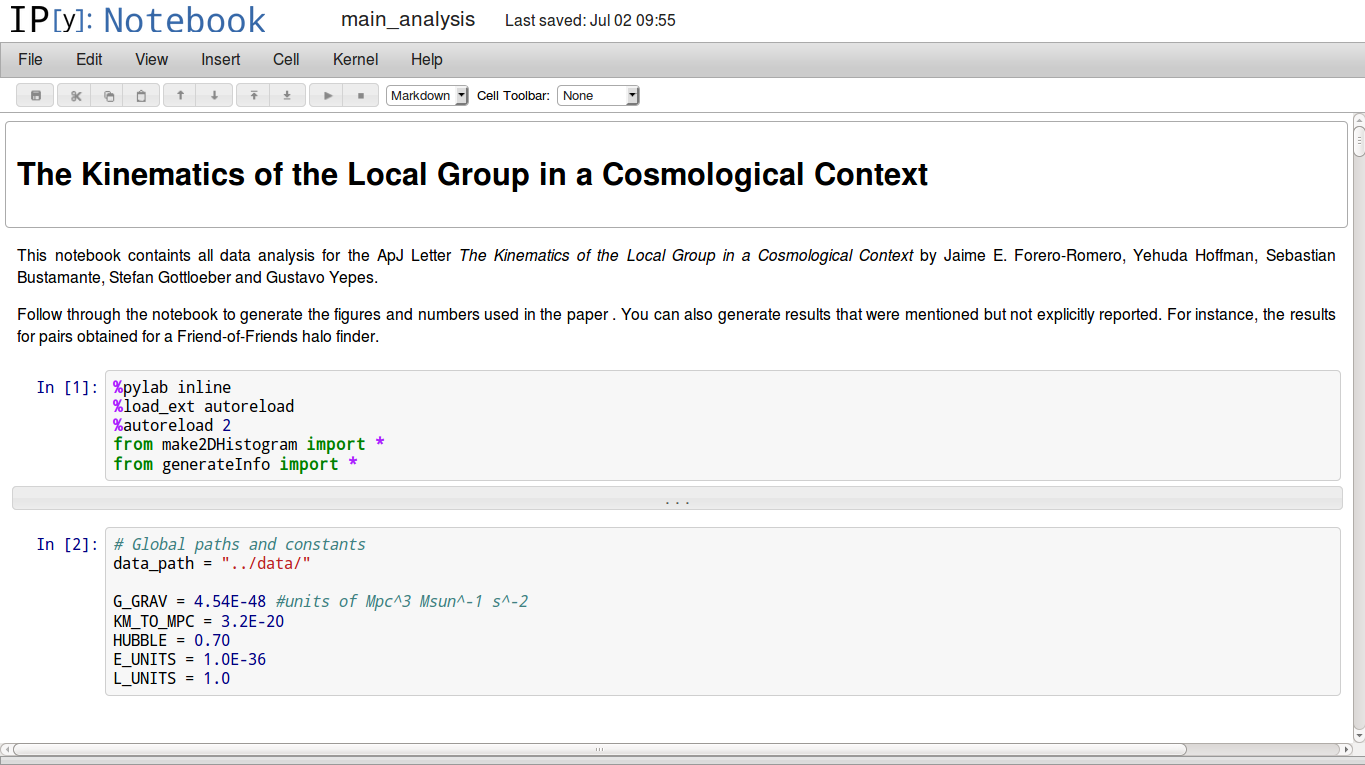

Analyzing and Explaining the Universe¶

Using Python for Cosmological and Open Research

Source www.arxiv.org.

Source www.github.com.

Source www.github.com.

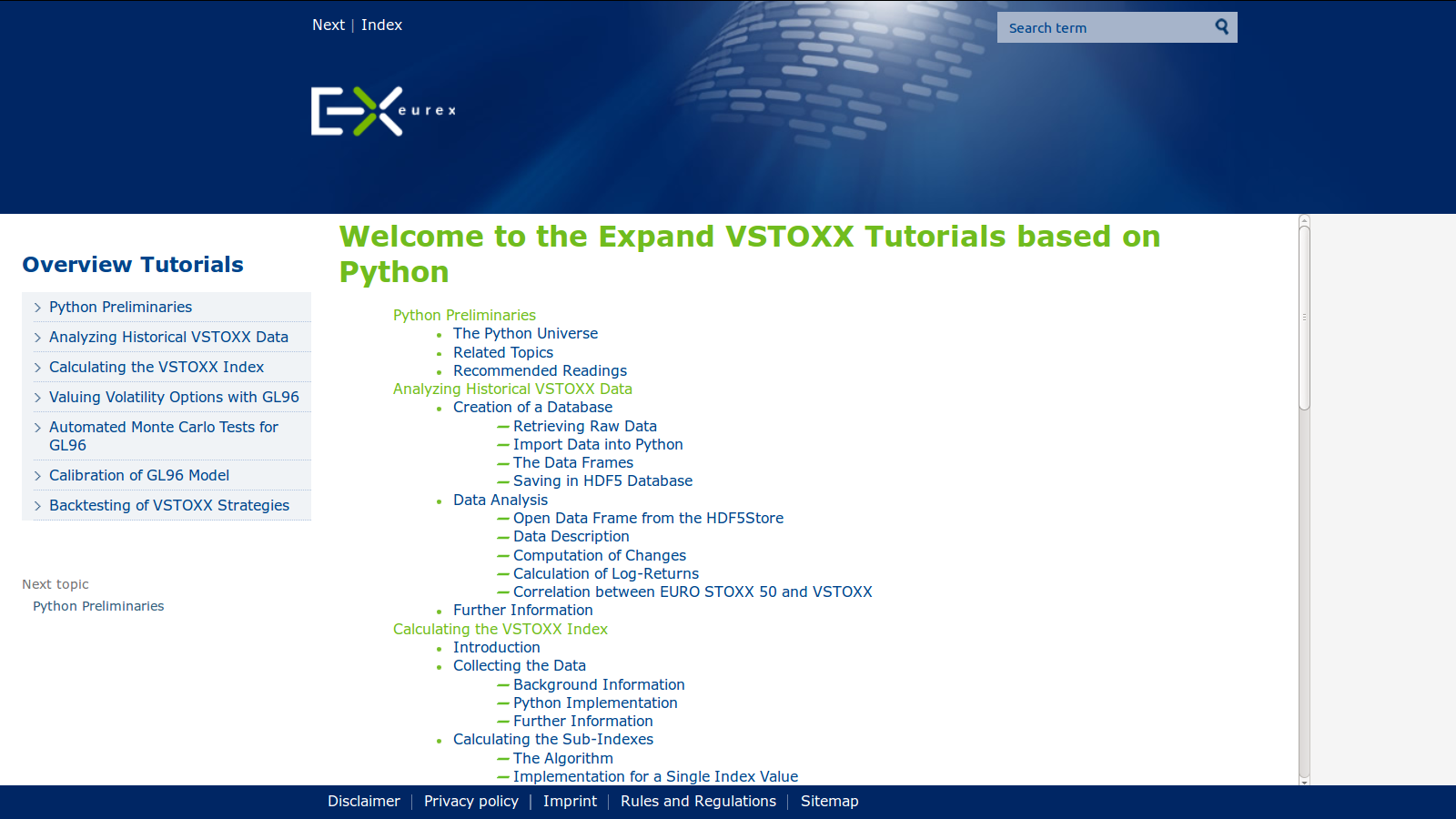

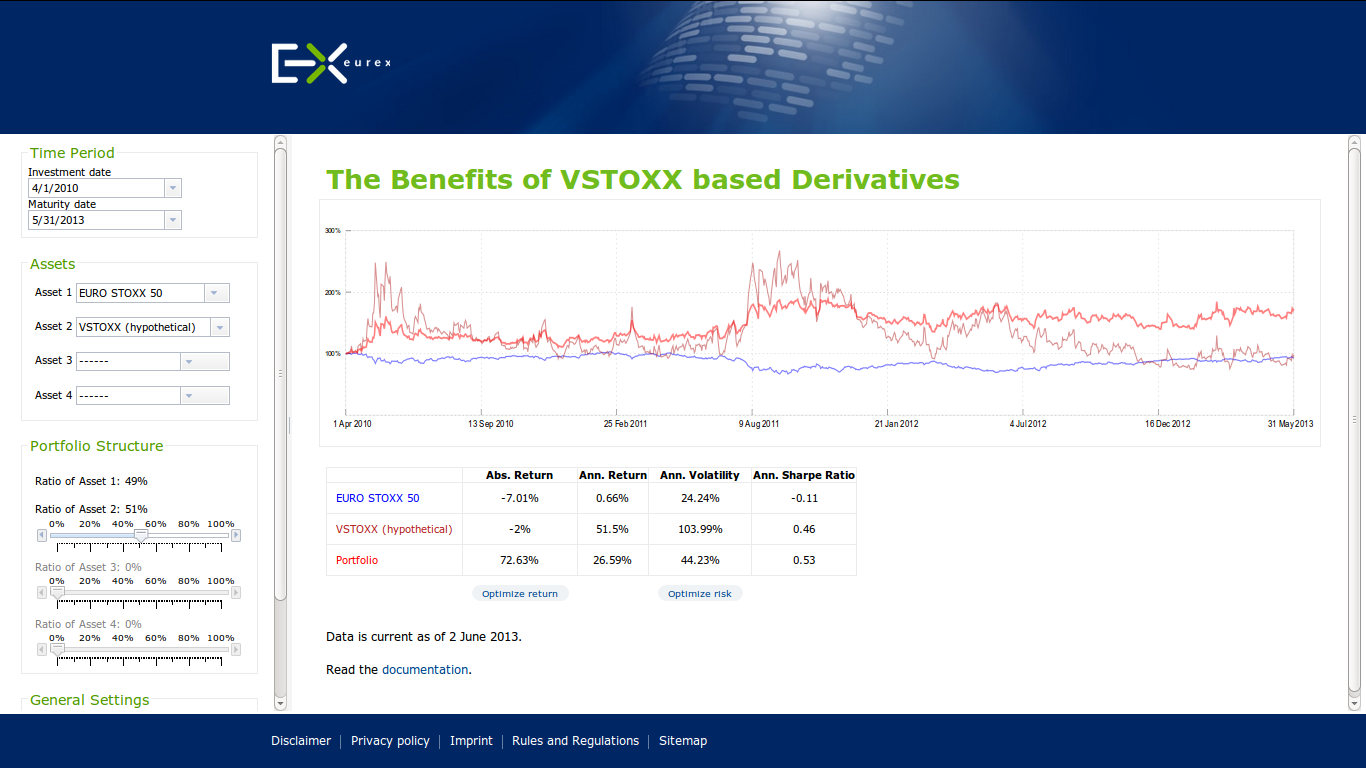

Value Added Services with Python¶

Using Python to communicate financial theory and derivative product features.

Eurex, one of the leading derivatives exchanges, recently launched a new Python-based marketing initiative called VSTOXX® Advanced Services.

"VSTOXX® Advanced Services

Benefits for professional trading. Free!

Futures and options on the VSTOXX® give investors and traders a targeted and leveraged means to take a view on European volatility, based on the implied volatility derived from EURO STOXX 50® Index Options. Portfolio diversification and optimizing volatility exposure are amongst the strongest reasons to access volatility via VSTOXX® derivatives.

Our advanced VSTOXX® Services offer a number of added benefits for professional trading. On the following pages you will find a wealth of information – tutorials, scripts and tools for back testing – that help you efficiently analyze data related to the VSTOXX® volatility index, understand how the VSTOXX® volatility index is calculated and how you can value derivatives written on the VSTOXX® index."

Source www.eurexchange.com.

Source www.eurexchange.com.

Source www.eurexchange.com.

The VSTOXX® Advanced Services do

- provide an educational one-stop offering regarding the VSTOXX® and related derivatives

- provide the necessary theoretical background in a concise yet complete fashion

- provide Python scripts that are self-contained, illustrate the theoretical concepts and that are immediately applicable

In economic terms, the project obviously shall facilitate trading in the respective products to increase liquidity. This should be mainly reached by lowering barriers of entry to this cutting edge product class.

The main tool used is "applicable transparency" through providing executable Python scripts.

Major target groups are:

- traders

- investors

- risk managers

- quants

- developers

- researchers

- teachers/students

Why Python?

- Open Source

- Concise "Mathematical" Syntax

- Financial Features

- General Purpose Language

- Long-Term Strategic View

Which libraries & tools?

- NumPy

- pandas

- SciPy

- matplotlib

- PyTables

- Sphinx

... the standard scientific stack.

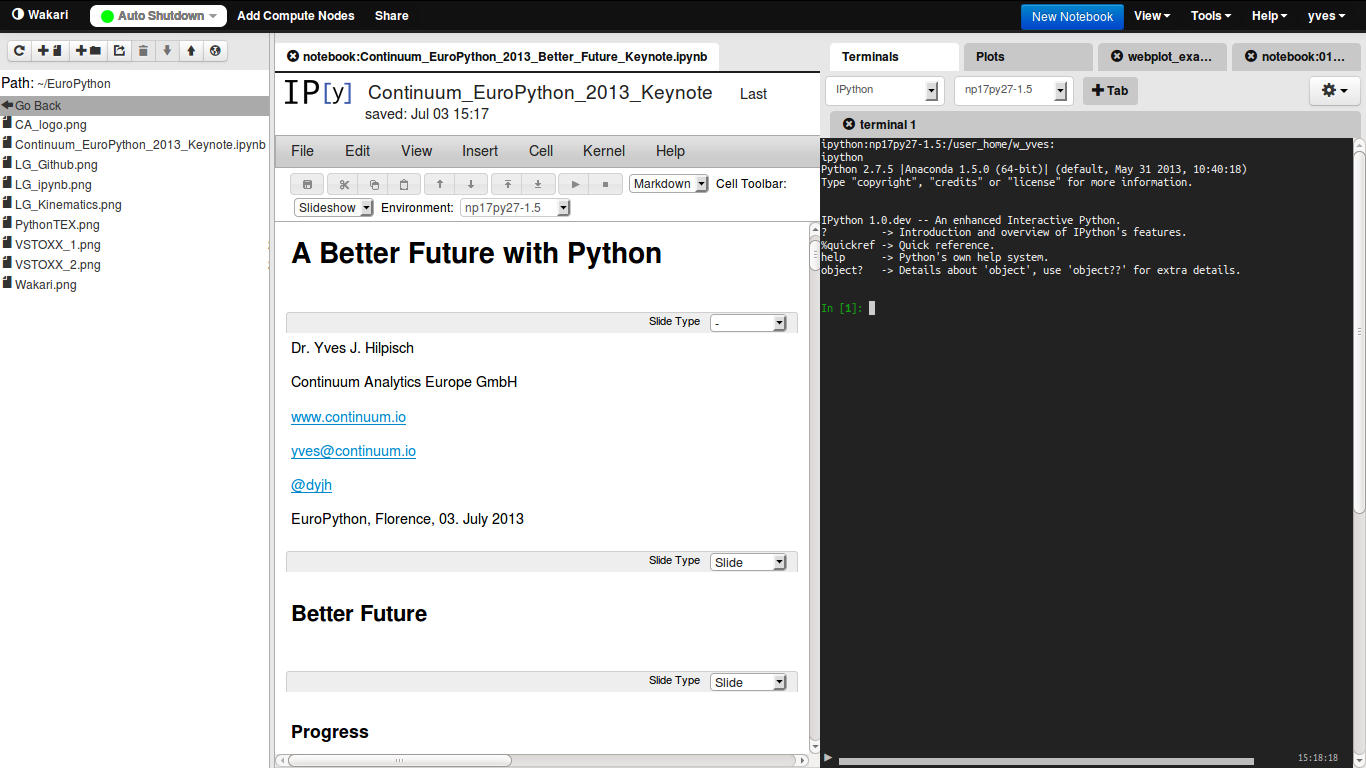

Web-based, Collaborative Data Analytics¶

... and Development, Visualization, Presentation, Publication, Documentation

Source www.wakari.io.

Maybe Our Biggest Challenge ...¶

... to make the use of Python as simple and reliable as possible

As a community, we should put our emphasis, among others, maybe even more on topics like:

- Education – at schools, universities, in trainings, through books

- Installation – distribution (e.g. Anaconda), Web services (e.g. Wakari.io), packaging (e.g. Binstar.org)

- Standardization – language (2 vs. 3), style (PEP 8), documentation (Sphinx)

- Building Services – professionally supporting libraries, packages, distributions

The Python Quants GmbH – Experts for Python in Quant Finance¶

The Python Quants GmbH – the company Web site

Dr. Yves J. Hilpisch – my personal Web site

Derivatives Analytics with Python – my new book

Read an Excerpt and Order the Book

Contact Us